Tar (Tape Archive) is a popular file archiving format in Linux. It can be combined with gzip (tar.gz) or bzip2 (tar.bz2) for compression. The tar command is the most widely used command-line utility for creating compressed archive files (of packages, source code, databases and much more) that can easily be transferred from one machine to another or over a network.


In this article, we will show you how to download tar archives using two well-known command-line downloaders, wget and cURL, and extract them with a single command.

How to Download and Extract File Using Wget Command

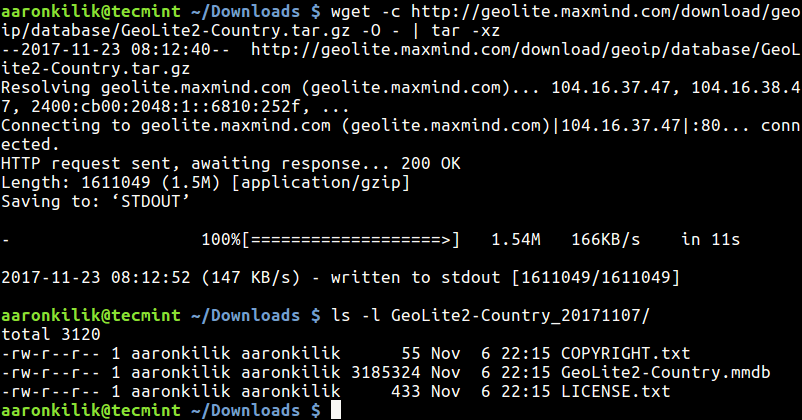

The example below shows how to download and unpack the latest GeoLite2 Country database (used by the Nginx GeoIP module) in the current directory.

# wget -c http://geolite.maxmind.com/download/geoip/database/GeoLite2-Country.tar.gz -O - | tar -xz

The wget option -O specifies the file to which the downloaded document is written; here we use -, which means it is written to standard output and piped to tar. The tar flag -x extracts files from an archive, and -z decompresses archives that were compressed with gzip. On most Linux systems, GNU tar reads the archive from standard input when no -f option is given.
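To see what the pipe does without touching the network, the sketch below builds a small gzip-compressed tarball locally and streams it into tar through standard input. Here `cat` stands in for `wget ... -O -` (an assumption made only so the example runs offline), the archive and directory names are hypothetical, and `-f -` spells out explicitly that tar should read the archive from standard input.

```shell
#!/bin/sh
# Build a tiny sample archive in a scratch directory; it is a hypothetical
# stand-in for GeoLite2-Country.tar.gz so the example runs without network.
work=$(mktemp -d)
mkdir -p "$work/src/sample"
echo "sample data" > "$work/src/sample/README.txt"
tar -czf "$work/sample.tar.gz" -C "$work/src" sample

# Stream the archive through a pipe and extract it in a fresh directory.
# `cat` plays the role of `wget -O -` by writing the archive to stdout;
# -x extracts, -z gunzips, and -f - makes tar read the archive from stdin.
mkdir -p "$work/extract"
(cd "$work/extract" && cat "$work/sample.tar.gz" | tar -xzf -)
```

Nothing is written to disk between the two commands: tar unpacks the data as it arrives on the pipe.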

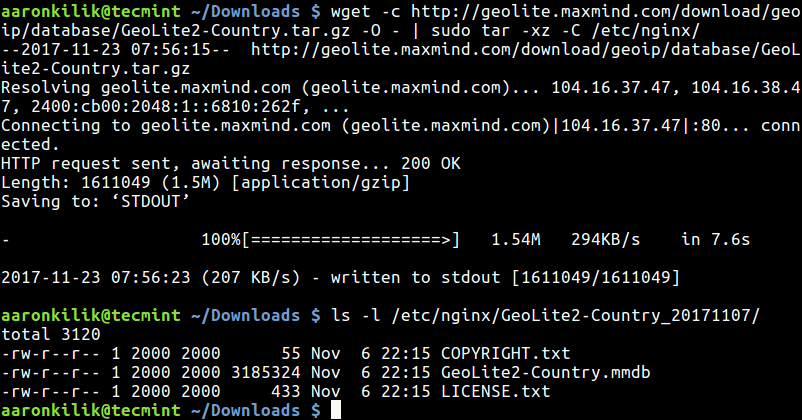

To extract the archive to a specific directory, /etc/nginx/ in this case, add the -C flag as follows.

Note: If you are extracting files to a directory that requires root permissions, use the sudo command to run tar.

$ wget -c http://geolite.maxmind.com/download/geoip/database/GeoLite2-Country.tar.gz -O - | sudo tar -xz -C /etc/nginx/
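The -C option makes tar change into the given directory before extracting, and tar stops with an error if that directory does not exist, so it can help to create it first. The sketch below uses a scratch directory as a hypothetical stand-in for /etc/nginx/ (so no sudo is needed) and again uses `cat` in place of the downloader; the sample archive contents are made up for illustration.

```shell
#!/bin/sh
# Hypothetical sample archive standing in for GeoLite2-Country.tar.gz.
work=$(mktemp -d)
mkdir -p "$work/src/sample"
echo "country db placeholder" > "$work/src/sample/data.txt"
tar -czf "$work/sample.tar.gz" -C "$work/src" sample

# Hypothetical target directory; on a real system this would be /etc/nginx/
# and both mkdir and tar would need to be run with sudo.
dest="$work/etc-nginx"
mkdir -p "$dest"   # tar -C fails if the directory is missing

# `cat` stands in for `wget -O -`; tar extracts into $dest instead of the cwd.
cat "$work/sample.tar.gz" | tar -xzf - -C "$dest"
```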

Alternatively, you can use the following command, in which the archive file is first saved to your system and then extracted.

$ wget -c http://geolite.maxmind.com/download/geoip/database/GeoLite2-Country.tar.gz && tar -xzf GeoLite2-Country.tar.gz
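The && operator runs tar only if the download command exits with status 0, so a failed download does not leave tar trying to unpack a missing or incomplete file. The sketch below illustrates this with two hypothetical stand-in functions, `download_ok` and `download_fail`, used in place of wget so it runs offline.

```shell
#!/bin/sh
work=$(mktemp -d)
cd "$work" || exit 1

# Sample content that a successful "download" will deliver.
mkdir -p src/sample
echo "hello" > src/sample/hello.txt
tar -czf src/sample.tar.gz -C src sample

# Hypothetical stand-ins for `wget -c URL`: one succeeds, one fails.
download_ok()   { cp src/sample.tar.gz ./GeoLite2-Country.tar.gz; }
download_fail() { echo "download failed" >&2; return 1; }

# Success: the archive is saved, then extracted into the current directory.
download_ok && tar -xzf GeoLite2-Country.tar.gz

# Failure: && short-circuits, so tar never runs and ./failed stays empty.
mkdir -p failed
download_fail && tar -xzf GeoLite2-Country.tar.gz -C failed || echo "tar skipped: download failed"
```

Note that `a && b || c` also runs `c` when `b` fails, so the message is only accurate here because tar is never reached.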

To save the archive first and then extract it to a specific directory, use the following command.

$ wget -c http://geolite.maxmind.com/download/geoip/database/GeoLite2-Country.tar.gz && sudo tar -xzf GeoLite2-Country.tar.gz -C /etc/nginx/

How to Download and Extract File Using cURL Command

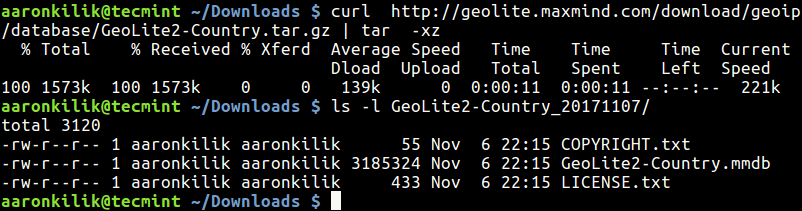

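Using the same archive as in the previous example, this is how you can use cURL to download and unpack it in the current working directory. Unlike wget, curl writes the downloaded data to standard output by default, so no extra option is needed to pipe it into tar.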

$ curl http://geolite.maxmind.com/download/geoip/database/GeoLite2-Country.tar.gz | tar -xz
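One caveat when piping curl into tar: by default curl exits successfully even when the server answers with an HTTP error such as 404, and it pipes the error page into tar, which then fails because the input is not gzip data. A more defensive variant adds -f (fail on HTTP errors), -sS (hide the progress meter but still print errors) and -L (follow redirects). The helper name `fetch_and_extract` below is hypothetical, used only for illustration.

```shell
#!/bin/sh
# Hypothetical helper: download URL $1 with curl and stream it into tar,
# extracting into directory $2 (default: the current directory).
#   -f   exit non-zero on HTTP errors instead of piping the error page
#   -sS  no progress meter, but still print errors to stderr
#   -L   follow redirects
fetch_and_extract() {
    mkdir -p "${2:-.}" &&
        curl -fsSL "$1" | tar -xzf - -C "${2:-.}"
}
```

For example, `fetch_and_extract http://geolite.maxmind.com/download/geoip/database/GeoLite2-Country.tar.gz .` would unpack the archive into the current directory. Without further options, the exit status of a pipeline is that of its last command (tar); in bash, `set -o pipefail` makes the pipeline also report a curl failure.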

To extract the archive to a different directory while downloading, use the following command.

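$ curl http://geolite.maxmind.com/download/geoip/database/GeoLite2-Country.tar.gz | sudo tar -xz -C /etc/nginx/

OR, to save the archive first and then extract it, add curl's -O option, which saves the file under its remote name (without it, curl only writes to standard output and tar finds no file to extract):

$ curl -O http://geolite.maxmind.com/download/geoip/database/GeoLite2-Country.tar.gz && sudo tar -xzf GeoLite2-Country.tar.gz -C /etc/nginx/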

That’s all! In this short but useful guide, we showed you how to download and extract archive files with a single command. If you have any queries, use the comment section below to reach us.

Not to be picky but… In all your examples you may be doing ONE operation, but you are executing TWO separate commands. Depending on the example, you are either piping (|) wget and cURL commands into a tar command, or you are chaining (&&) wget and cURL commands with a tar command. I know that when commands are chained with ‘&&’, the command following the double ampersand does not get executed if the command preceding the ampersands fails. But what is piped into tar in case the wget or cURL command fails for some reason?

Therefore, shouldn’t the title be “How to Download and Extract Tar Files in One Operation”? It’s a minor quibble, I know.

When I saw the topic under Learn Linux Tricks & Tips, I was intrigued. How does he do that? Turns out the only difference is that you are combining what most of us do in two steps into one.

Oh you were being literal. I thought this was something else. Maybe make a tutorial on pipes instead?

@TheOuterLinux

“I thought this was something else”: not really. It is not anything new, but I just described some of the possible and easy ways to save time while downloading TAR archives with the wget and curl commands. I know this can be helpful to newbies.